
ProcessedVIDEO0015 mpeg2video

May 4th, 2011 | Posted in Uncategorized

Real-time fastener recognition

April 12th, 2011 | Posted in augmented reality | classification | Columbia | computer vision | demo | lab work | machine learning | OpenCV | pics or it didn't happen

For a while now I have been working on an idea for a system that recognizes fasteners (screws, nuts, bolts, nails, washers, etc.) on the fly and then measures some descriptive statistics of each part (e.g., length, width, inner and outer diameter). Today I finally got a prototype of the recognition system up and running. The system applies a raw threshold to the scene to separate the parts from the background, performs some operations to put each part into a standard form (namely, aligned to its major axis), and then extracts some basic statistics: edge length and orientation histograms, and Hu moments. This feature vector is then piped into a support vector machine (SVM) that does the recognition. Right now the system runs at about 8 FPS at full resolution. The training error of the SVM was about 13%, but the training data was really poor and not very large (75 samples, about 10% of which were essentially misfires from an automatic data extraction module I wrote).

I still have a long way to go: the feature extractor could use some work, and the whole data processing pipeline needs to be optimized. In particular, there are some fairly costly image rotation operations that could be modified to improve performance. I also need to train the classifier on the full set of fasteners, not just bolts and nuts.
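For readers curious about the "aligned to the major axis" step, here is a minimal sketch of one common way to do it: threshold a grayscale image, then compute the part's centroid and orientation from its second-order central moments. It is plain C++ with no OpenCV dependency and is not the code the prototype uses. The `Blob`, `thresholdDark`, and `majorAxis` names are hypothetical, and the sketch assumes dark parts on a light background with a single part in view.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical result type: centroid, orientation, and pixel area of one thresholded part.
struct Blob {
    double cx = 0.0, cy = 0.0;  // centroid in pixels
    double angle = 0.0;         // major-axis angle in radians, relative to the +x axis
    long area = 0;              // number of foreground pixels
};

// Raw threshold of a row-major 8-bit grayscale image.
// Assumes dark parts on a light background: pixels below `thresh` become foreground (1).
std::vector<std::uint8_t> thresholdDark(const std::vector<std::uint8_t>& gray, std::uint8_t thresh) {
    std::vector<std::uint8_t> mask(gray.size());
    for (std::size_t i = 0; i < gray.size(); ++i) mask[i] = gray[i] < thresh ? 1 : 0;
    return mask;
}

// Centroid and major-axis orientation from the mask's image moments.
// Assumes the mask contains a single part.
Blob majorAxis(const std::vector<std::uint8_t>& mask, int width, int height) {
    assert(mask.size() == static_cast<std::size_t>(width) * height);
    Blob b;
    double m00 = 0, m10 = 0, m01 = 0;  // raw moments
    for (int y = 0; y < height; ++y)
        for (int x = 0; x < width; ++x)
            if (mask[y * width + x]) { m00 += 1; m10 += x; m01 += y; }
    if (m00 == 0) return b;  // empty mask: nothing to align
    b.area = static_cast<long>(m00);
    b.cx = m10 / m00;
    b.cy = m01 / m00;
    double mu20 = 0, mu02 = 0, mu11 = 0;  // second-order central moments
    for (int y = 0; y < height; ++y)
        for (int x = 0; x < width; ++x)
            if (mask[y * width + x]) {
                double dx = x - b.cx, dy = y - b.cy;
                mu20 += dx * dx;
                mu02 += dy * dy;
                mu11 += dx * dy;
            }
    // Orientation of the principal (major) axis: 0.5 * atan2(2*mu11, mu20 - mu02).
    b.angle = 0.5 * std::atan2(2.0 * mu11, mu20 - mu02);
    return b;
}
```

Rotating the part by `-angle` about `(cx, cy)` puts its major axis along the x axis. Because image y grows downward, a positive angle appears clockwise on screen. In OpenCV, `cv::moments` returns the same `mu20`, `mu02`, and `mu11` values, so the loops could be replaced by a single call.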

Once the recognition system is working well, I hope to use the ALVAR augmented reality library and its fiducials to determine the part dimensions by assuming that each part is effectively coplanar with the fiducial. The fiducial should also give us a three-dimensional location for the recognized part. Right now I am doing this work for the CGUI lab at Columbia. Our end goal is to wrap this code into an augmented reality system for maintenance applications, where knowledge may be shared between a remote subject matter expert and an on-site maintenance technician. We hope that a system like this can speed up maintenance tasks by helping the maintainer identify and locate parts faster. Part of the problem with using AR in this domain is that head-mounted displays have fairly limited resolution, which reduces the wearer's visual acuity and makes it difficult to recognize individual parts.
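The planar assumption turns measurement into a 2D problem: if the part lies in the fiducial's plane, the four detected marker corners define a homography from image pixels to millimetres on that plane, and distances between mapped points are real-world lengths. The sketch below assumes the corner pixel coordinates and the marker's physical side length are already known. ALVAR estimates the marker pose itself, but this code deliberately does not use its API; `Pt`, `Homography`, `fromFourPoints`, and `planarDistanceMm` are hypothetical names.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <utility>

struct Pt { double x, y; };

// Solve the 8x8 system stored as an augmented 8x9 matrix (Gauss-Jordan, partial pivoting).
static std::array<double, 8> solve8(std::array<std::array<double, 9>, 8> m) {
    for (int col = 0; col < 8; ++col) {
        int piv = col;
        for (int r = col + 1; r < 8; ++r)
            if (std::fabs(m[r][col]) > std::fabs(m[piv][col])) piv = r;
        std::swap(m[col], m[piv]);
        assert(std::fabs(m[col][col]) > 1e-12 && "degenerate corner configuration");
        for (int r = 0; r < 8; ++r) {
            if (r == col) continue;
            double f = m[r][col] / m[col][col];
            for (int c = col; c < 9; ++c) m[r][c] -= f * m[col][c];
        }
    }
    std::array<double, 8> h{};
    for (int i = 0; i < 8; ++i) h[i] = m[i][8] / m[i][i];
    return h;
}

// Homography mapping image pixels to plane coordinates (mm), with h[8] fixed to 1.
struct Homography {
    std::array<double, 9> h;
    Pt apply(Pt p) const {
        double w = h[6] * p.x + h[7] * p.y + h[8];
        return {(h[0] * p.x + h[1] * p.y + h[2]) / w, (h[3] * p.x + h[4] * p.y + h[5]) / w};
    }
};

// Build the homography from four image/plane correspondences (e.g., the marker corners).
Homography fromFourPoints(const std::array<Pt, 4>& img, const std::array<Pt, 4>& plane) {
    std::array<std::array<double, 9>, 8> m{};
    for (int i = 0; i < 4; ++i) {
        double x = img[i].x, y = img[i].y, u = plane[i].x, v = plane[i].y;
        // u * (h6 x + h7 y + 1) = h0 x + h1 y + h2, and likewise for v.
        m[2 * i] = {x, y, 1, 0, 0, 0, -u * x, -u * y, u};
        m[2 * i + 1] = {0, 0, 0, x, y, 1, -v * x, -v * y, v};
    }
    auto s = solve8(m);
    return {{s[0], s[1], s[2], s[3], s[4], s[5], s[6], s[7], 1.0}};
}

// Distance in mm between two image points, assuming both lie on the marker's plane.
double planarDistanceMm(const Homography& H, Pt a, Pt b) {
    Pt pa = H.apply(a), pb = H.apply(b);
    return std::hypot(pa.x - pb.x, pa.y - pb.y);
}
```

For example, with a 50 mm marker whose corners are detected at (100, 100), (200, 100), (200, 200), and (100, 200), two points 60 px apart on the same row map to 30 mm apart. The result is only as good as the coplanarity assumption: any part height above the marker plane shows up as measurement error.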

Classifying Images

March 25th, 2011 | Posted in C++ | classification | code | Columbia | computer vision | machine learning | OpenCV | segmentation

I have been burning the midnight oil finishing up a project for my Computational Photography course at Columbia University. For this project we had to build two classification systems: one that classified beach and grassland imagery using a given feature vector description, and a second that classified any two kinds of objects using whatever technique we wished to generate the feature vectors. It was suggested that we do our work in Matlab, but we had the option to work in C++. I opted for the latter, as I really wanted to write something that I could possibly reuse in another project. The final system was developed under Windows using Visual Studio 9 and makes liberal use of OpenCV 2.2 and LibSVM 3.0.

The beach / grassland images were classified by dividing each image into a three-by-three grid and calculating the mean, standard deviation, and skew of each HSV channel in every cell. This feature vector was then fed to a support vector machine with a linear kernel. The overall error rate was 13.33%: 11.67% of the beach images were misclassified as grassland, while 15.00% of the grassland images were misclassified as beach. The classification is written in the top-left corner of each image. If an image was misclassified, two values are listed: the red value is the predicted class, and the green value is the true class.
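The grid color-moment feature can be sketched as follows. This is a minimal illustration, not the project's code: it assumes the image has already been converted to HSV (for example with OpenCV's `cv::cvtColor`) and copied into an interleaved, row-major `std::vector<double>`, and `gridColorMoments` is a hypothetical name. "Skew" is taken here as the standardized third central moment, which is an assumption (some color-moment formulations use the cube root of the third central moment instead), and hue is treated as a linear value, ignoring its wrap-around.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Color moments over a grid x grid layout of cells (3 x 3 by default).
// Assumes `hsv` is row-major and interleaved (H, S, V for each pixel).
// Output order: for each cell (row by row), for each channel H, S, V:
// mean, standard deviation, skew. A 3 x 3 grid gives 81 values.
std::vector<double> gridColorMoments(const std::vector<double>& hsv, int width, int height,
                                     int grid = 3) {
    assert(hsv.size() == static_cast<std::size_t>(width) * height * 3);
    assert(grid > 0 && width >= grid && height >= grid);
    std::vector<double> features;
    features.reserve(static_cast<std::size_t>(grid) * grid * 9);
    for (int gy = 0; gy < grid; ++gy) {
        for (int gx = 0; gx < grid; ++gx) {
            // Integer cell bounds; cell sizes differ by at most one pixel
            // when the image size is not divisible by `grid`.
            int x0 = gx * width / grid, x1 = (gx + 1) * width / grid;
            int y0 = gy * height / grid, y1 = (gy + 1) * height / grid;
            double n = static_cast<double>(x1 - x0) * (y1 - y0);
            for (int c = 0; c < 3; ++c) {
                double sum = 0.0;
                for (int y = y0; y < y1; ++y)
                    for (int x = x0; x < x1; ++x) sum += hsv[(y * width + x) * 3 + c];
                double mean = sum / n;
                double m2 = 0.0, m3 = 0.0;  // second and third central moments
                for (int y = y0; y < y1; ++y)
                    for (int x = x0; x < x1; ++x) {
                        double d = hsv[(y * width + x) * 3 + c] - mean;
                        m2 += d * d;
                        m3 += d * d * d;
                    }
                m2 /= n;
                m3 /= n;
                double sd = std::sqrt(m2);
                // Standardized skewness; defined as 0 for a constant cell.
                double skew = sd > 0.0 ? m3 / (sd * sd * sd) : 0.0;
                features.push_back(mean);
                features.push_back(sd);
                features.push_back(skew);
            }
        }
    }
    return features;
}
```

With the default three-by-three grid this yields 9 × 3 × 3 = 81 values per image, which would then be passed to the linear SVM.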

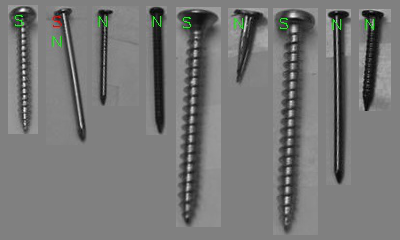

For the second part of the project I wrote a system that classifies images as either screws or nails. The system assumes that both kinds of objects are aligned to be roughly vertical; a while back I wrote a separate class that reorients images based on the major axis of the extracted contour. To do the classification I first thresholded the grayscale image and then extracted the resulting contour. Next, a few morphological operations were performed on the contour, and the Hu moments and a few other statistics were calculated. I also applied the Canny edge detector to the images and piped the results into a Hough line detector. The detected lines were then binned according to length and orientation. This data was used to generate a feature vector, which was classified with a support vector machine with a linear kernel. The overall error rate was 3.58%: 1.33% of the screw images were misclassified as nails, while 7.27% of the nail images were misclassified as screws. The classification is written in the top-left corner of each image; I used the letter “N” to indicate nails and the letter “S” to indicate screws. If an image was misclassified, two values are listed. Some results are shown below.
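The length-and-orientation binning step might look like the sketch below. It takes line segments as endpoint pairs (the same information OpenCV's probabilistic `cv::HoughLinesP` returns), folds each segment's angle into [0, π) since a line segment has no direction, and accumulates a normalized 2D histogram. `Segment` and `lineHistogram` are hypothetical names, and the bin counts and the `maxLength` cap are illustrative parameters, not values from this project.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// A detected line segment given by its two endpoints (pixels).
struct Segment { double x1, y1, x2, y2; };

// Flattened 2D histogram indexed as [angleBin * lengthBins + lengthBin].
// Normalized to sum to 1 so images with different numbers of lines are comparable.
std::vector<double> lineHistogram(const std::vector<Segment>& segs, int angleBins,
                                  int lengthBins, double maxLength) {
    assert(angleBins > 0 && lengthBins > 0 && maxLength > 0.0);
    const double kPi = std::acos(-1.0);
    std::vector<double> hist(static_cast<std::size_t>(angleBins) * lengthBins, 0.0);
    for (const Segment& s : segs) {
        double dx = s.x2 - s.x1, dy = s.y2 - s.y1;
        double len = std::hypot(dx, dy);
        if (len == 0.0) continue;             // skip degenerate segments
        double theta = std::atan2(dy, dx);    // in [-pi, pi]
        if (theta < 0.0) theta += kPi;        // fold: direction is irrelevant
        if (theta >= kPi) theta -= kPi;       // now in [0, pi)
        int a = std::min(angleBins - 1, static_cast<int>(theta / kPi * angleBins));
        // Segments longer than maxLength land in the last length bin.
        int l = std::min(lengthBins - 1, static_cast<int>(len / maxLength * lengthBins));
        hist[static_cast<std::size_t>(a) * lengthBins + l] += 1.0;
    }
    double total = 0.0;
    for (double v : hist) total += v;
    if (total > 0.0)
        for (double& v : hist) v /= total;
    return hist;
}
```

The flattened histogram could then be concatenated with the Hu moments and other shape statistics to form the SVM feature vector.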

The complete set of beach and grassland images can be found in my beach / grassland classification set on Flickr, along with the complete set of screw / nail classification results. The code is posted on my computational photography Google Code page. It was written under the gun, so it isn't nearly as clean as I would like, and everything is very dataset-specific. Hopefully, once the semester is over, I can go back and refactor it into a more general solution.